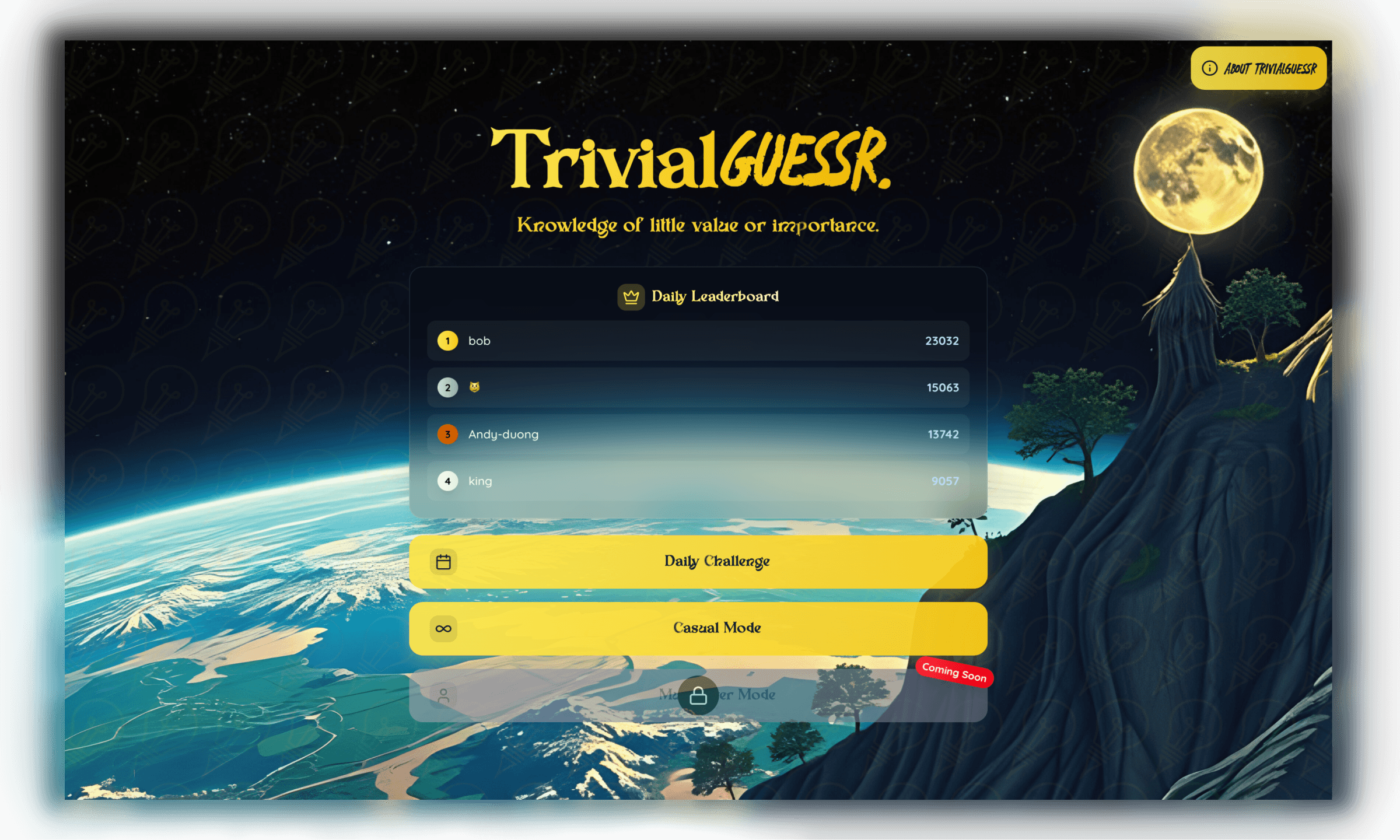

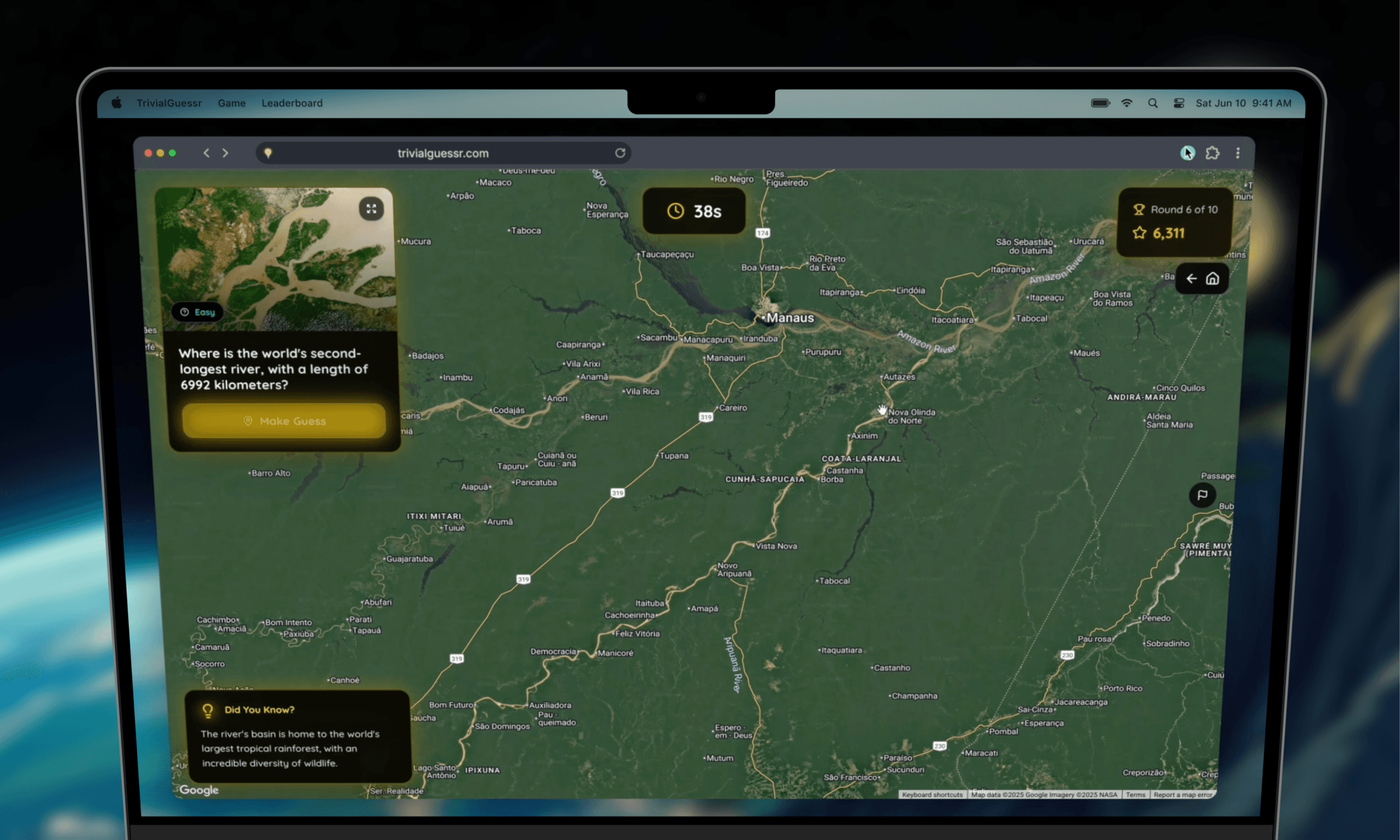

trivialguessr is geoguessr for trivia: each question is an interesting trivia problem whose answer can be traced to a city or coordinate somewhere in the world. within the first two weeks of launch, it grew to 200+ users, 2k events, and a peak of 60 daily active users.

andy and i wanted to ship something fast at the beginning of our 1a term. i always see takes on geoguessr, like watguessr for uwaterloo locations, so i thought i'd make one for trivia.

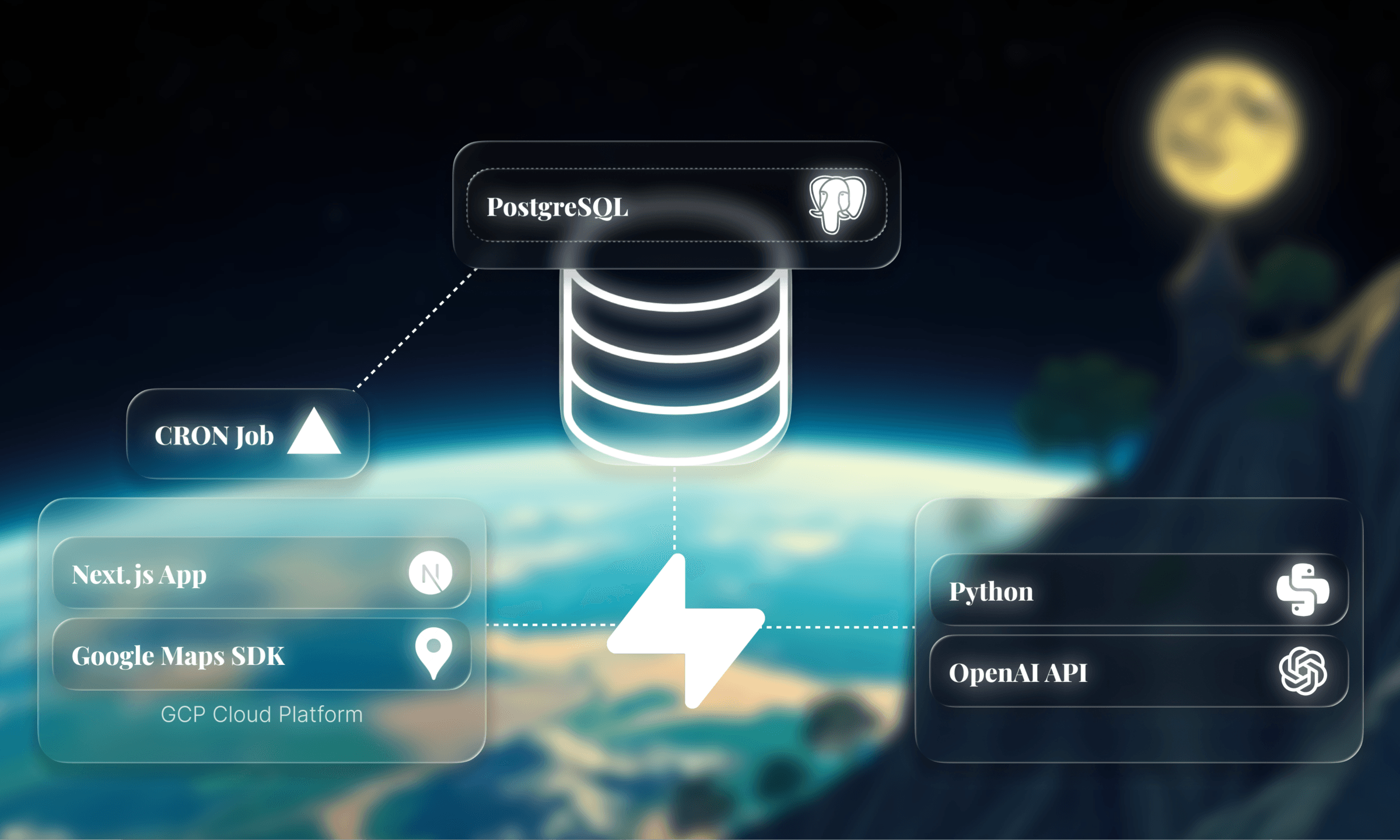

the architecture itself was extremely trivial. all we needed was a next.js web app, gcp for the google maps sdk, and supabase for postgres.

the question generation pipeline was a simple python script: an llm writes the questions and a scraper fetches images for them.

every day, we auto-generate a new daily challenge. users can play anonymously and see if they can crack the leaderboard.

without auth, it's impossible to stop users from playing multiple times, but we store play state locally for peace of mind, so nominally you can only play the daily challenge once.

our daily challenge data needs to be cleared every day at 12am est. we do this with a vercel cron job:

client/vercel.json

```json
{
  "crons": [
    {
      "path": "/api/questions?action=daily-replace&limit=5&cron_secret=$CRON_SECRET",
      "schedule": "0 5 * * *"
    }
  ]
}
```
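vercel evaluates cron schedules in utc, so `0 5 * * *` fires at 05:00 utc. a quick check with python's standard-library `zoneinfo` module (which relies on the system's iana time zone data) shows what that means in new york wall-clock time at different points in the year. `local_fire_time` is just an illustrative helper:

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

NEW_YORK = ZoneInfo("America/New_York")

def local_fire_time(year: int, month: int, day: int) -> datetime:
    """Return the New York wall-clock time of a 05:00 UTC cron run on the given date."""
    utc_run = datetime(year, month, day, 5, 0, tzinfo=timezone.utc)
    return utc_run.astimezone(NEW_YORK)

# one winter date (standard time) and one summer date (daylight saving time)
for ymd in [(2024, 1, 15), (2024, 7, 15)]:
    print(ymd, local_fire_time(*ymd).strftime("%H:%M %Z"))
```

in winter the reset lands exactly at midnight est; during daylight saving time the same utc schedule lands at 1am edt, so the "day" boundary shifts by an hour for part of the year.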

the cron job triggers two tasks related to the daily_leaderboard table and the daily_questions table:

clearing the leaderboard table: we match every row and remove it with `delete()`.

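server/index.ts

```ts
import { Request, Response } from "express";

// supabaseAdmin: assumed to be a service-role supabase client created earlier in this file
app.post("/api/delete-table", async (req: Request, res: Response) => {
  const { tableName } = req.body;

  // supabase expects delete() to be paired with a filter; as long as no row uses
  // the all-zero (nil) uuid as its id, this filter matches every row
  const { error } = await supabaseAdmin
    .from(tableName)
    .delete()
    .neq("id", "00000000-0000-0000-0000-000000000000");

  // report failures instead of always claiming success
  if (error) return res.status(500).json({ success: false, error: error.message });
  return res.json({ success: true });
});
```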

this has to go through the server because deletes require admin permissions, and the admin key can't be exposed client-side.
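one thing worth noting: as shown, `/api/delete-table` deletes whichever table name arrives in the request body, with no check of its own. a minimal hardening sketch, in python for brevity, assuming the endpoint should only ever clear the two daily tables; `ALLOWED_TABLES` and `validate_table_name` are hypothetical names, not part of the project:

```python
# Hypothetical allowlist: only the tables the daily reset is meant to clear.
ALLOWED_TABLES = frozenset({"daily_leaderboard", "daily_questions"})

def validate_table_name(table_name: object) -> str:
    """Return table_name if it is an allowed string, otherwise raise ValueError.

    Rejecting anything outside the allowlist means a forged request body
    cannot point the admin-level delete at some other table.
    """
    if not isinstance(table_name, str) or table_name not in ALLOWED_TABLES:
        raise ValueError(f"refusing to clear table {table_name!r}")
    return table_name
```

the endpoint would run this check before building the supabase query and answer with a 400 on a `ValueError`.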

resetting the question table: this uses delete-and-insert logic rather than a replace. first, it calls `/api/delete-table` to clear `daily_questions`; then it selects 5 random questions from the existing question bank and inserts them.
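picking the daily set is just sampling without replacement from the question bank. a minimal python sketch, assuming the bank is available as a list of question ids; `pick_daily_questions` and the optional date-based seed are illustrative, not necessarily how the project does it:

```python
import random
from datetime import date
from typing import Optional, Sequence

def pick_daily_questions(question_ids: Sequence[str], limit: int = 5,
                         day: Optional[date] = None) -> list[str]:
    """Pick `limit` distinct question ids for the daily challenge.

    If `day` is given, the RNG is seeded from it, so re-running the job on the
    same day reproduces the same set (handy if a cron run has to be retried).
    """
    if limit > len(question_ids):
        raise ValueError("question bank is smaller than the requested limit")
    rng = random.Random(day.isoformat()) if day else random.Random()
    # random.sample draws without replacement, so no question repeats within a day
    return rng.sample(list(question_ids), limit)

bank = [f"q{i}" for i in range(1500)]
print(pick_daily_questions(bank, day=date(2024, 1, 15)))
```

`limit=5` mirrors the `limit=5` query parameter in the cron path.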

the rest of the reset doesn't need admin permissions, so it lives in the next.js app as a dynamic route handler. the logic is straightforward, with additional secret validation so that only authorized cron callers can trigger it.

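client/app/api/[table]/route.ts

```ts
import { NextRequest, NextResponse } from "next/server";

// vercel cron jobs call the configured path with a GET request
export async function GET(request: NextRequest) {
  const secret = process.env.CRON_SECRET;
  // fail closed: if the env var is missing, a request with no authorization
  // header would otherwise pass (undefined !== undefined is false)
  if (!secret) {
    return NextResponse.json({ error: "Server misconfigured" }, { status: 500 });
  }

  const headerSecret = request.headers.get("x-clear-secret");
  const authHeader = request.headers.get("authorization"); // vercel sends "Bearer <CRON_SECRET>"
  const authSecret = authHeader?.replace("Bearer ", "");
  const cronSecret = request.nextUrl.searchParams.get("cron_secret");

  if (headerSecret !== secret && authSecret !== secret && cronSecret !== secret) {
    console.log("Unauthorized attempt - invalid secret");
    return NextResponse.json({ error: "Unauthorized" }, { status: 401 });
  }

  // ... clear table and replace questions
}
```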
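the route accepts the secret from three places: a custom `x-clear-secret` header, a bearer token, or the `cron_secret` query parameter. the same check can be sketched in python with two tweaks worth considering: it fails closed when no secret is configured, and it compares with `hmac.compare_digest`, which is designed to reduce timing side channels. `is_authorized` is a hypothetical helper, and the headers mapping is assumed to have lower-cased keys:

```python
import hmac
from typing import Mapping, Optional

def is_authorized(expected: Optional[str], headers: Mapping[str, str],
                  query: Mapping[str, str]) -> bool:
    """Return True if any of the three accepted locations carries the secret.

    `headers` keys are assumed to be lower-cased already, since HTTP header
    names are case-insensitive.
    """
    if not expected:
        # fail closed: a missing CRON_SECRET must never let requests through
        return False

    auth = headers.get("authorization", "")
    bearer = auth[len("Bearer "):] if auth.startswith("Bearer ") else None
    candidates = [headers.get("x-clear-secret"), bearer, query.get("cron_secret")]

    # compare as bytes so non-ascii input can't raise a TypeError
    return any(
        c is not None and hmac.compare_digest(c.encode(), expected.encode())
        for c in candidates
    )
```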

we generated ~1.5k unique questions with our python scripts:

`main.py` calls the openai api to generate trivia questions, then scrapes images from bing, with wikipedia as a fallback. the script includes anti-blocking measures like rotating user agents and adaptive delays.
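the fallback order and user-agent rotation can be sketched roughly like this. the real script's scraping code isn't shown, so `USER_AGENTS`, `find_image`, and the two fake fetchers (stand-ins for bing and wikipedia) are hypothetical placeholders:

```python
import random
from typing import Callable, Optional, Sequence

# Placeholder user-agent strings; a real scraper would use full browser UA strings.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
    "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
]

# A fetcher takes a search query plus request headers and returns an image URL or None.
Fetcher = Callable[[str, dict], Optional[str]]

def find_image(query: str, fetchers: Sequence[Fetcher]) -> Optional[str]:
    """Try each image source in order (e.g. bing first, then wikipedia).

    Each attempt gets a randomly chosen user agent, so consecutive requests
    don't all carry the same User-Agent header.
    """
    for fetch in fetchers:
        headers = {"User-Agent": random.choice(USER_AGENTS)}
        url = fetch(query, headers)
        if url:
            return url
    return None

def fake_bing(query: str, headers: dict) -> Optional[str]:
    return None  # simulates bing returning nothing (or blocking us)

def fake_wikipedia(query: str, headers: dict) -> Optional[str]:
    return f"https://example.org/{query}.jpg"  # simulates the fallback succeeding

print(find_image("eiffel_tower", [fake_bing, fake_wikipedia]))
```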

scripts/main.py

```python
import logging
import random
import time

logger = logging.getLogger(__name__)  # main.py's actual logger setup isn't shown

# method of the scraper class in main.py (rest of the class not shown)
def _adaptive_delay(self):
    """Implement adaptive delays based on success rate"""
    self.request_count += 1

    # Base delay with jitter
    base_delay = random.uniform(3, 6)

    # Increase delay if success rate is low (possible blocking)
    if self.request_count > 10:
        success_rate = self.success_count / self.request_count
        if success_rate < 0.7:
            base_delay *= 2  # Double delay if success rate below 70%
            logger.warning(f"Low success rate ({success_rate:.2%}), increasing delays")

    # Add extra delay every 100 requests
    if self.request_count % 100 == 0:
        base_delay += random.uniform(10, 20)
        logger.info(f"Request #{self.request_count}, taking extended break")

    time.sleep(base_delay)
```

we implemented a self-adjusting rate limiter that monitors its own success rate as it runs. once it has made more than 10 requests, it doubles the delay whenever the success rate drops below 70% (a sign of bot detection), and on every 100th request it takes an extra 10 to 20 second break. without this logic, the scraper got blocked after ~50 requests; with it, we successfully process 1000+ images per session.
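to see the numbers the limiter produces without actually sleeping, the delay calculation from `_adaptive_delay` can be pulled out into a pure function. this is a refactoring sketch, not the project's code; `compute_delay` and the injectable `rng` are hypothetical:

```python
import random

def compute_delay(request_count: int, success_count: int,
                  rng: random.Random) -> float:
    """Seconds to wait at request number `request_count` (already incremented),
    following the same rules as _adaptive_delay."""
    delay = rng.uniform(3, 6)                      # base delay with jitter
    if request_count > 10 and success_count / request_count < 0.7:
        delay *= 2                                 # likely being blocked: back off
    if request_count % 100 == 0:
        delay += rng.uniform(10, 20)               # periodic extended break
    return delay

rng = random.Random(0)
print(round(compute_delay(5, 5, rng), 2))     # early: plain 3-6 s jitter
print(round(compute_delay(50, 20, rng), 2))   # 40% success: doubled, 6-12 s
print(round(compute_delay(100, 95, rng), 2))  # healthy, but 100th request: 13-26 s
```

keeping the randomness injectable makes the rules easy to test, while the original method stays responsible for the counters and the actual `time.sleep`.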

1.5k+ unique questions!

checking for duplicates at generation time would mean comparing every new question against the entire question bank, which is slow and memory-hungry. instead, we deduplicate in batches:

`remove_duplicates.py` runs periodically to clean the database. it uses `difflib.SequenceMatcher` for text similarity, geopy distance for coordinate proximity, and semantic analysis to catch questions about the same location that are worded differently.
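a minimal sketch of the pairwise check, assuming each question has its text plus a latitude/longitude. it uses `difflib.SequenceMatcher` as described, but swaps geopy for a hand-written haversine distance to stay in the standard library, and leaves out the semantic-analysis step. the thresholds (0.85 similarity, 50 km) are illustrative guesses, not the project's values:

```python
import math
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations

EARTH_RADIUS_KM = 6371.0

@dataclass
class Question:
    id: str
    text: str
    lat: float
    lon: float

def haversine_km(a: Question, b: Question) -> float:
    """Great-circle distance between two questions' coordinates, in km."""
    phi1, phi2 = math.radians(a.lat), math.radians(b.lat)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon - a.lon)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))

def is_duplicate(a: Question, b: Question,
                 text_threshold: float = 0.85, max_km: float = 50.0) -> bool:
    """Flag pairs with near-identical wording that point at nearby places."""
    ratio = SequenceMatcher(None, a.text.lower(), b.text.lower()).ratio()
    return ratio >= text_threshold and haversine_km(a, b) <= max_km

def find_duplicates(questions: list[Question]) -> set[str]:
    """Return ids to delete: for each flagged pair, the later question (in list order)."""
    to_delete: set[str] = set()
    for a, b in combinations(questions, 2):
        if b.id not in to_delete and is_duplicate(a, b):
            to_delete.add(b.id)
    return to_delete
```

comparing every pair is O(n²) in the number of questions, which is exactly why this runs as a periodic batch job rather than on every insert.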

we tried to make the game as intuitive as possible. we even added a "report question" button so users can flag faulty questions, which we then review manually.

many improvements could be made to the game. multiplayer mode, audio and video questions, and auth could all be implemented in the future.

honestly, the architecture and pipeline behind trivialguessr aren't technically challenging at all. you could say the project is pretty trivial. i just wanted to ship a fun concept quickly.

i learned a lot about cron jobs and web scraping, and i'll keep building cooler things in the near future.